Scholars of the Best Engineering Colleges in India Explain Apache Hadoop

Hadoop, or Apache Hadoop, is a project of the Apache Software Foundation and an open source software platform for distributed, scalable computing. According to CS and IT scholars of the best engineering colleges in India, Hadoop delivers fast and reliable analysis of both structured and unstructured data. Because it can handle very large data sets, it is often associated with the term "big data".

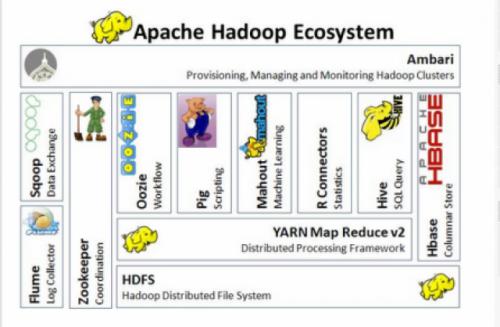

Apache Hadoop Library

The Apache Hadoop software library is a framework for the distributed processing of large data sets across clusters of computers using a simple programming model. Hadoop is designed to scale from a single server to a large number of machines, each of which provides local storage and computation.
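
The article does not name the programming model, but Hadoop's processing model is MapReduce. As a rough illustration only, the sketch below counts words in that style using plain Python in a single process. The function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and are not part of any Hadoop API; on a real cluster the framework would run the map and reduce steps on many machines and perform the grouping step itself.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every word in one line of input."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group emitted values by key, standing in for the framework's shuffle."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Combine all values collected for one key into a single count."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle and reduce in sequence over an iterable of lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())
```

Calling `word_count(["big data", "Big clusters"])` counts "big" twice because the map step lowercases each word. The point of the model is that each map call depends only on its own line, which is what would let a framework spread the work across machines.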

Hadoop Distributed File System

IT and CS scholars of the best engineering colleges in India have also explained HDFS, the Hadoop Distributed File System, which is a sub-project of the Apache Hadoop project. Its developers designed it as a fault-tolerant file system that runs on commodity hardware.

Apache Software Foundation Overview of HDFS Functions

According to the Apache Software Foundation, the primary objective of HDFS is to store data reliably even in the presence of failures, such as network partitions, DataNode failures and NameNode failures. DataNodes store the actual data of the file system, while the NameNode, in the classic design with one NameNode per cluster, is a single point of failure for the whole HDFS cluster.
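
HDFS tolerates DataNode failures by keeping several copies (replicas) of each block on different DataNodes; the default replication factor is 3. The following is a minimal sketch of that idea, not HDFS code. The helper names `place_replicas` and `readable_blocks` are hypothetical, and the round-robin placement is an assumption made for brevity (the real placement policy is rack-aware).

```python
def place_replicas(block_ids, datanodes, replication=3):
    """Assign each block to `replication` distinct DataNodes, round-robin."""
    if replication > len(datanodes):
        raise ValueError("not enough DataNodes for the requested replication")
    placement = {}
    for start, block in enumerate(block_ids):
        # Consecutive nodes (wrapping around) are always distinct here.
        placement[block] = [
            datanodes[(start + i) % len(datanodes)] for i in range(replication)
        ]
    return placement


def readable_blocks(placement, failed):
    """Return the blocks that still have at least one replica on a live node."""
    return {
        block
        for block, nodes in placement.items()
        if any(node not in failed for node in nodes)
    }
```

With four DataNodes and three replicas per block, any two nodes can fail and every block remains readable in this toy model. With a single replica, losing one node loses every block stored on it.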

Master/Slave Architecture of HDFS

HDFS uses a master/slave architecture, in which one device acts as the master and controls one or more other devices, commonly referred to as slaves. An HDFS cluster consists of a single NameNode, a master server that manages the file system namespace and regulates access to files, and the DataNodes, which act as slaves and store the data.
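
The division of labour described above can be sketched as a toy in-memory model: the master keeps only metadata (which blocks make up a file and where they live), while the slaves keep the block contents. All class and function names here are hypothetical, the 4-byte block size is chosen only to make the example small, and replication is left out.

```python
class DataNode:
    """Slave: stores block contents only."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}  # block id -> bytes


class NameNode:
    """Master: holds metadata only (path -> ordered block locations)."""

    def __init__(self, datanode_names):
        self.datanode_names = list(datanode_names)
        self.namespace = {}  # path -> list of (block id, DataNode name)

    def allocate(self, path, num_blocks):
        """Record which DataNode will hold each block of a new file."""
        entries = [
            (f"{path}#{i}", self.datanode_names[i % len(self.datanode_names)])
            for i in range(num_blocks)
        ]
        self.namespace[path] = entries
        return entries

    def locate(self, path):
        """Return the ordered (block id, DataNode name) pairs for a file."""
        return list(self.namespace[path])


def write_file(namenode, datanodes, path, data, block_size=4):
    """Client side: ask the master where blocks go, then send data to slaves."""
    chunks = [data[i:i + block_size] for i in range(0, len(data), block_size)]
    for (block_id, dn_name), chunk in zip(namenode.allocate(path, len(chunks)), chunks):
        datanodes[dn_name].blocks[block_id] = chunk


def read_file(namenode, datanodes, path):
    """Client side: look up block locations, then fetch blocks from slaves."""
    return b"".join(
        datanodes[dn_name].blocks[block_id]
        for block_id, dn_name in namenode.locate(path)
    )
```

In this sketch the file bytes never pass through the `NameNode` object; it only answers "where" questions. That is also why losing the only NameNode makes the cluster unusable: the blocks still exist on the slaves, but nothing records which blocks belong to which file.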
