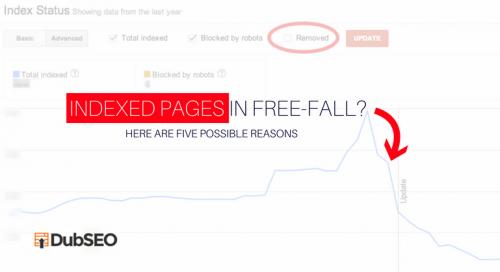

# 5 Top Reasons Why Your Pages Are Dropping Out of the Google Index

Having your webpages indexed by Google (and by the other search engines) is more essential than you may imagine. Experts from a reliable online marketing company in London say that without indexing, your pages can’t rank on the SERP. But how do you know whether your pages have been indexed?

To confirm that Google has indexed your pages, follow this tried and tested procedure.

- Search Google using the site: operator (for example, site:yourdomain.com).

- Go to GSC (Google Search Console) and check the status of your XML sitemap submission.

- Simultaneously check the overall indexation status.
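When checking the sitemap submission, it can help to know how many URLs the sitemap actually lists, so that number can be compared with the indexed count reported in Google Search Console. The following is a minimal sketch, assuming a standard XML sitemap that uses the sitemaps.org namespace; the function name `sitemap_urls` is made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return the list of page URLs found in <url><loc> entries of a sitemap."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]
```

Calling `len(sitemap_urls(xml_text))` on the contents of your sitemap file gives a baseline for the number of pages you expect to be indexed. Sitemap index files, which point to other sitemaps, are not handled by this sketch.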

Now suppose you see a decrease in the number of your pages indexed by Google. This is a common problem that can arise at any time. There are two main factors to consider when you find your pages are not indexed:

- Either Google didn’t like your page or

- Its bot failed to crawl the page easily

When pages drop out of the index, your indexed page count falls sharply. This indicates one of the following:

- Google has slapped a penalty on you

- Googlebot failed to crawl your pages, or

- It thinks the pages are irrelevant

Experts from one of the best SEO agencies in London offer the following tips to get out of this soup.

- Make sure the pages are loading properly

You have to ensure that the pages return the correct 200 HTTP status in the response header. Your server may be suffering from frequent or lengthy downtime. Or maybe your domain expired recently and you have to renew it. You can use any of the following crawling tools to determine the header status:

Screaming Frog, DeepCrawl, Botify, Xenu and others.
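For a quick spot check on a handful of URLs without a full crawler, a short script can do the same job. This is only a sketch using Python's standard library; the function names `fetch_status` and `classify_status` are invented for illustration, and the labels are an assumption about what is useful to report.

```python
import urllib.error
import urllib.request


def fetch_status(url, timeout=10):
    """Return the HTTP status code for url, or None if the server is unreachable.

    urlopen follows redirects, so this is the status of the final destination.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses are raised as exceptions; the code is still useful.
        return error.code
    except OSError:
        # DNS failure, refused connection, expired domain, or timeout.
        return None


def classify_status(status):
    """Translate a status code into a short label for an indexing audit."""
    if status is None:
        return "unreachable"
    if status == 200:
        return "ok"
    if 300 <= status < 400:
        return "redirect"
    if 400 <= status < 500:
        return "client error"
    if 500 <= status < 600:
        return "server error"
    return "other"
```

Running `classify_status(fetch_status(url))` over the URLs in your sitemap flags any page that does not answer with a 200. Some servers reject HEAD requests, in which case a GET request is the safer choice.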

- URLs changed recently

Sometimes a tiny, seemingly insignificant change in your CMS, server settings or backend programming results in a cascading change to your domain, subdomain or folder structure. This may change your URLs. Search engines can remember the old URLs, but if those URLs are not properly redirected, your pages can be de-indexed. You need to get hold of the old URLs and map them with 301 redirects to the corresponding new URLs.
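When mapping old URLs to new ones, it is easy to end up with chains (an old URL redirecting to another old URL) or loops. The sketch below is one possible way to tidy such a map before turning it into 301 rules on your server; the function name `flatten_redirects` and the example paths are hypothetical.

```python
def flatten_redirects(redirects):
    """Point every old URL directly at its final destination.

    redirects maps an old URL to the URL it should redirect to.
    Raises ValueError if the map contains a redirect loop.
    """
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until the target is no longer itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened
```

For example, a map of `/old-page` to `/newer-page` and `/newer-page` to `/final-page` is flattened so that both old paths redirect straight to `/final-page`, sparing the crawler an extra hop.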

- Duplicate content issue

Fixing duplicate content often involves implementing noindex meta tags, canonical tags, 301 redirects or disallow rules in robots.txt. Experts associated with SEO services say each of these measures can decrease the number of your indexed URLs.

This is a unique circumstance where a decrease in the number of your indexed pages is a positive thing. You don’t need to take any action to fix it because it’s good for your website. However, you can double-check to ensure this is indeed the cause of the decrease and not some other factor.
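One way to double-check is to look at the indexing signals a page actually sends. The following sketch, built on Python's standard `html.parser`, reports whether a page carries a robots noindex directive and which canonical URL it declares. The class and function names are made up for illustration, and the sketch reads only the HTML, not the `X-Robots-Tag` HTTP header or robots.txt.

```python
from html.parser import HTMLParser


class IndexingSignalParser(HTMLParser):
    """Collects the robots noindex directive and canonical link from a page."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attributes.get("name", "").lower() in ("robots", "googlebot"):
            content = attributes.get("content", "")
            directives = [part.strip().lower() for part in content.split(",")]
            # "none" is shorthand for "noindex, nofollow".
            if "noindex" in directives or "none" in directives:
                self.noindex = True
        elif tag == "link" and "canonical" in attributes.get("rel", "").lower().split():
            self.canonical = attributes.get("href") or None


def indexing_signals(html):
    """Return a dict with the noindex flag and canonical URL found in html."""
    parser = IndexingSignalParser()
    parser.feed(html)
    return {"noindex": parser.noindex, "canonical": parser.canonical}
```

If the pages that dropped out of the index all report `noindex` as true, or declare a canonical URL other than their own, the decrease is most likely the intended result of your duplicate content fix.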

- Pages timing out

Some servers have bandwidth restrictions, which keep down the cost associated with higher bandwidth. Such servers may need to be upgraded. In other cases the problem is hardware related, and it can be resolved by upgrading the hardware configuration, particularly memory and processing power.

- On the other hand, some websites block IP addresses when a visitor attempts to access too many pages at a time. This is implemented to block DDoS attacks, though it can also have a bad impact on your site.

- There are multiple solutions to this problem. You have to choose the right option based on what is causing it.

- In case of a server bandwidth limitation, consider upgrading the service. If it’s a hardware issue, check whether server caching technology is already in place, since caching puts less stress on the server.

- If there’s any anti-DDoS software installed, change its settings so that it never blocks Googlebot.
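Before allowing a visitor through as Googlebot, it is worth confirming that it really is Googlebot, since the user agent string can be faked. Google's documented method is a reverse DNS lookup on the IP address followed by a forward lookup on the returned hostname. Below is a minimal sketch of that check; the function name and the injectable lookup parameters are illustrative assumptions, and the default forward lookup handles IPv4 only.

```python
import socket

# Hostname suffixes that Google documents for its crawlers.
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip, reverse_lookup=None, forward_lookup=None):
    """Return True if ip reverse-resolves to a Google host that resolves back to ip.

    The lookup functions can be replaced, for example in tests.
    """
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        hostname = reverse_lookup(ip)
        if not hostname.lower().rstrip(".").endswith(GOOGLE_CRAWLER_DOMAINS):
            return False
        # The forward lookup must return the original IP, otherwise the
        # reverse DNS record could have been spoofed.
        return ip in forward_lookup(hostname)
    except OSError:
        # Covers failed DNS lookups (socket.herror, socket.gaierror).
        return False
```

An anti-DDoS rule could then exempt only those requests for which `is_verified_googlebot(ip)` returns true, rather than trusting the user agent header.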

How search engine bots see your site

Professionals in the SEO industry in London say there are instances when a search spider sees your site differently from what people see. Some developers build websites without caring much about SEO implications. Some SEO practitioners even take undesirable steps to cloak content and fool the search engines. Hackers, too, play a part in many cases by misleading the search engines.

The best way out in such cases is to use the Fetch and Render feature in Google Search Console (in the current version of Search Console, the URL Inspection tool serves this purpose). It tells you whether Googlebot is seeing the same content that you’re seeing on your site.
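As a rough first check outside Search Console, you can request the same page with a Googlebot user agent and with a browser user agent, then compare the two responses. This is only a sketch: it does not render JavaScript, it will not catch cloaking that keys on IP address rather than user agent, and the function names and the browser user agent string are illustrative assumptions.

```python
import difflib
import urllib.request

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Example browser user agent; any current browser string would do.
BROWSER_UA = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
)


def fetch_html(url, user_agent, timeout=10):
    """Download url while identifying as user_agent and return the body as text."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def similarity(html_a, html_b):
    """Return a ratio from 0.0 to 1.0 for how alike two documents are, word by word."""
    return difflib.SequenceMatcher(None, html_a.split(), html_b.split()).ratio()
```

A call such as `similarity(fetch_html(url, GOOGLEBOT_UA), fetch_html(url, BROWSER_UA))` that returns a value well below 1.0 suggests the two audiences are being served different content and the page deserves a closer look.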

Indexed pages are not counted as typical KPIs

KPIs, or Key Performance Indicators, help measure the success of any SEO campaign. These indicators usually revolve around keyword ranking and organic search traffic. KPIs tend to focus on business goals, which are linked to revenue, so the indexed page count is not typically one of them.

If the number of your indexed pages increases, there’s a higher possibility of ranking for more keywords on the SERP. This improves your business prospects. There’s no way for your pages to rank unless the bot is able to see, crawl and index them. Thus, the objective of looking at indexed pages is to ensure the search engine bot is able to crawl and index the pages properly.

DubSEO is a prominent SEO agency in London. The tips discussed above come from the experts working at DubSEO. Follow these tips to ensure the best returns on your effort.

